Last time, I discussed the pitfalls of taking on too much work. When heavy workloads lead you to cut corners in the design process, the consequences often come back to haunt you in unexpected ways.

A prime example is the satisfaction survey in which every response comes back as “5 (Highly Satisfied)”, a typical symptom of poorly designed evaluation.

If you’re looking at those results and thinking, “100% satisfaction! A huge success!”, think again: the scores may reflect learners rushing through the survey rather than genuine engagement.

In this article, we’ll take a closer look at how to design effective evaluations in instructional design.

Is there substance behind that “satisfaction”?

We’ve all felt that moment of relief on seeing every single question on the post-training survey marked “5 (Strongly Agree)”. But two pitfalls hide behind that result:

- The Temptation to “Straight-Line” Answers: When there are too many questions or the session is running late, learners who are desperate to head out often resort to “straight-lining”: clicking the far-right button all the way down without reading a single word.

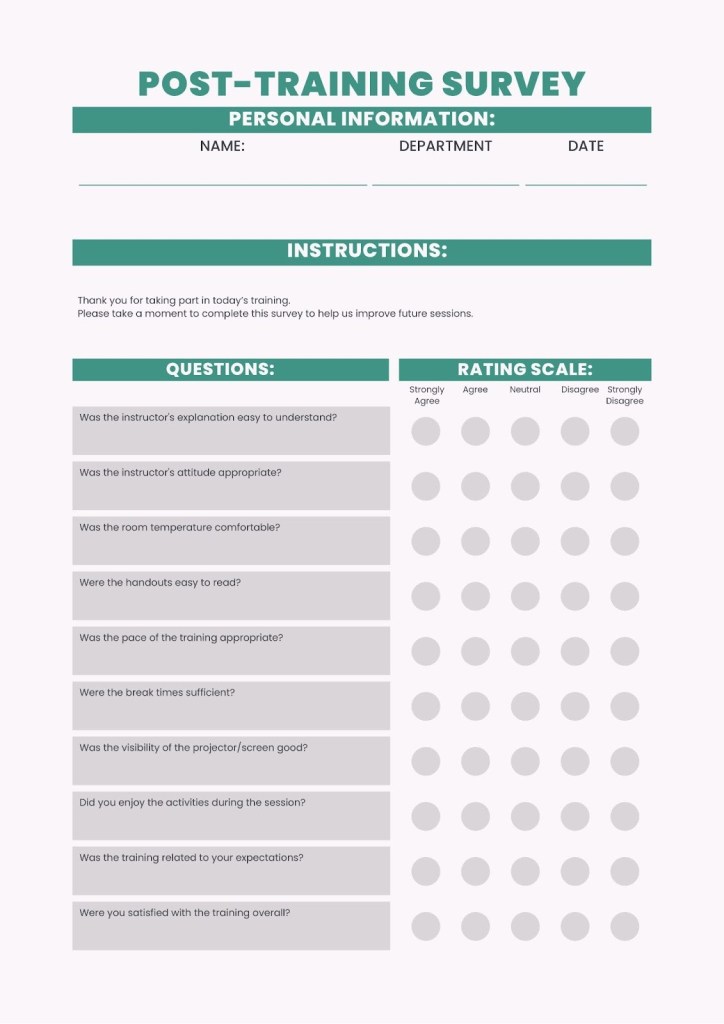

- The Limits of Smile Sheets: Questions like “How was the instructor’s attitude?” or “Was the room temperature comfortable?” reveal nothing about the crucial question: “What did I learn?”

“Straight 5s” can sometimes mean “Nothing special, nothing bad—just forgettable.”
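If you export responses as rows of numeric ratings, a quick check can show how much of an “all 5s” result comes from straight-lining. The following is only a minimal sketch in Python: the function names and the sample data are hypothetical, and it assumes each response is a list of ratings for the same scale questions. A flagged response is a prompt to look closer, not proof that the learner was disengaged.

```python
from typing import Sequence


def is_straight_lined(answers: Sequence[int]) -> bool:
    """Return True if a response gives the same rating to every question.

    Responses with fewer than two answers are never flagged, since a single
    answer cannot form a pattern.
    """
    return len(answers) >= 2 and len(set(answers)) == 1


def straight_line_rate(responses: Sequence[Sequence[int]]) -> float:
    """Return the share of responses that are straight-lined (0.0 if empty)."""
    if not responses:
        return 0.0
    flagged = sum(is_straight_lined(r) for r in responses)
    return flagged / len(responses)


# Hypothetical sample: four learners, five scale questions each.
responses = [
    [5, 5, 5, 5, 5],  # straight-lined at 5
    [4, 5, 3, 5, 4],  # varied answers
    [5, 5, 5, 5, 5],  # straight-lined at 5
    [3, 3, 3, 3, 3],  # straight-lined at 3
]

print(straight_line_rate(responses))  # 0.75
```

A high rate suggests the survey is too long or poorly timed, which is a design problem rather than a learner problem.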

Why Overzealous Surveys Backfire

As an instructional designer (ID), are you making your surveys overly detailed in your eagerness to collect more data? That eagerness is often what triggers the “all 5s” phenomenon.

For learners, a post-training survey should be a moment for reflection. But when you hit them with 20 or 30 questions about what YOU want to know, it stops being a moment for introspection and becomes nothing more than busywork.

Put yourself in their shoes. What do you want to do once training ends? Most people don’t want to dwell on the session any longer.

Overloading the survey with questions robs learners of a valuable chance to reflect on and articulate what they learned, and it ultimately undermines the reliability of your own data.

Redefining the Purpose of “Evaluation”: Measure Change, Not Just Smiles

The goal isn’t just to hand stakeholders a “satisfaction” report to make them feel good. It’s about driving behavioural change.

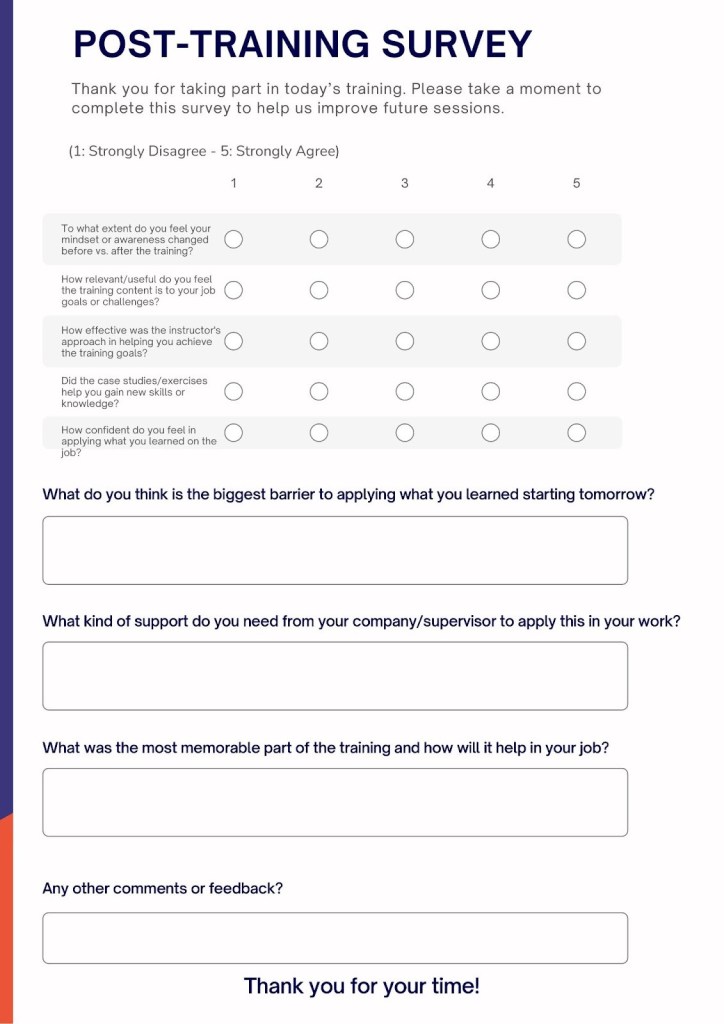

- Refine Your Questions: Go beyond 5-point scales and yes/no questions. Adding just one open-ended question that requires thought, such as “What’s the biggest barrier to using this in your workplace starting tomorrow?” or “What kind of support do you need to apply what you learned in this training to your actual work?”, dramatically improves data quality.

- Look Beyond “Satisfaction”: How do you measure not just learner reactions, but subsequent individual performance and workplace changes? You need to treat evaluation as a line, not a point, by following up after the program or interviewing participants months later.

Summary: True Evaluation Happens in the Field

Settling for an “all 5s” survey result and halting further improvement is the very pitfall instructional designers should guard against.

The real measure of an evaluation lies not in survey numbers, but in the moment participants act differently in the field than they did before.

Don’t let surveys become mere “tasks.” Listen to what learners really feel behind the numbers. Design post-training surveys to serve more than that one session, so that they become “evaluation designs that lead to the next step.”

